FaceDrive: Facial Expression Driven Operation to Control Virtual Supernumerary Robotic Arms

Abstract

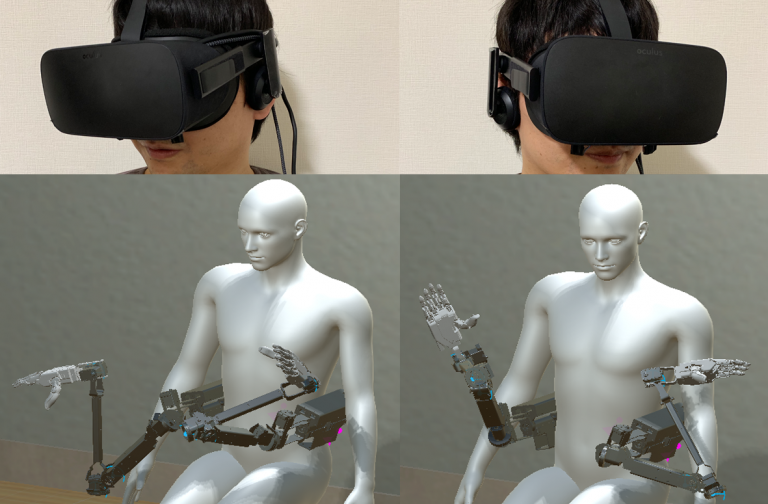

Supernumerary robotic arms can increase the number of degrees of freedom available to a human, but they require a control method that reflects the operator's intention. To control the arms in accordance with that intention, we propose an operation method based on the operator's facial expressions, in which each facial expression is mapped to a supernumerary robotic arm operation. To measure facial expressions, we use an optical sensor-based approach (here, sensors placed inside a head-mounted display); the sensor data are fed to an SVM that classifies them into facial expressions. The supernumerary robotic arms then carry out the operation corresponding to the operator's predicted facial expression. We built a virtual reality environment with supernumerary robotic arms and an avatar that can be synchronized with the operator to investigate the most suitable mapping between facial expressions and supernumerary robotic arm operations.
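The abstract describes a pipeline in which optical sensor readings from inside the HMD are classified by an SVM and the predicted expression is then translated into an arm operation. The sketch below illustrates that flow under loudly stated assumptions: the four sensor channels, the synthetic readings, the expression labels (`neutral`, `smile`, `frown`, `mouth_open`) and the `EXPRESSION_TO_COMMAND` table are all hypothetical, and the classifier is a generic one-vs-rest linear SVM trained with the Pegasos sub-gradient method, not necessarily the SVM configuration used in FaceDrive.

```python
import random
from typing import Dict, List, Optional, Sequence

# HYPOTHETICAL labels and arm commands; FaceDrive's actual label set and the
# expression-to-operation mapping it studies are not reproduced here.
EXPRESSION_TO_COMMAND: Dict[str, Optional[str]] = {
    "neutral": None,            # relaxed face: issue no arm command
    "smile": "grasp",
    "frown": "release",
    "mouth_open": "extend_arm",
}


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def train_binary_svm(xs, ys: List[int], lam: float = 0.01,
                     epochs: int = 200, seed: int = 0) -> List[float]:
    """Linear SVM trained with Pegasos (stochastic sub-gradient on the hinge loss).

    ys must be +1/-1. A constant 1.0 is appended to each sample so the last
    weight acts as a bias term.
    """
    rng = random.Random(seed)
    data = [(list(x) + [1.0], y) for x, y in zip(xs, ys)]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            t += 1
            eta = 1.0 / (lam * t)
            hinge_active = y * dot(w, x) < 1            # margin violated
            w = [(1.0 - eta * lam) * wi for wi in w]    # L2 regularization step
            if hinge_active:
                w = [wi + eta * y * xi for wi, xi in zip(w, x)]
    return w


class OneVsRestSVM:
    """Multi-class classifier: one binary linear SVM per expression label."""

    def __init__(self, lam: float = 0.01, epochs: int = 200) -> None:
        self.lam = lam
        self.epochs = epochs
        self.classes: List[str] = []
        self.weights: Dict[str, List[float]] = {}

    def fit(self, xs, labels: List[str]) -> "OneVsRestSVM":
        self.classes = sorted(set(labels))
        for c in self.classes:
            ys = [1 if lab == c else -1 for lab in labels]
            self.weights[c] = train_binary_svm(xs, ys, self.lam, self.epochs)
        return self

    def predict(self, x: Sequence[float]) -> str:
        xb = list(x) + [1.0]  # same bias feature as in training
        # choose the expression whose binary SVM gives the largest decision value
        return max(self.classes, key=lambda c: dot(self.weights[c], xb))


def make_synthetic_frames(n_per_class: int = 20, seed: int = 1):
    """SYNTHETIC 4-channel 'optical sensor' frames: one prototype per expression plus noise."""
    prototypes = {
        "neutral":    [0.2, 0.2, 0.2, 0.2],
        "smile":      [0.8, 0.2, 0.2, 0.2],
        "frown":      [0.2, 0.8, 0.2, 0.2],
        "mouth_open": [0.2, 0.2, 0.8, 0.8],
    }
    rng = random.Random(seed)
    xs, labels = [], []
    for label, proto in prototypes.items():
        for _ in range(n_per_class):
            xs.append([v + rng.gauss(0.0, 0.03) for v in proto])
            labels.append(label)
    return xs, labels, prototypes


def command_for_frame(model: OneVsRestSVM, frame: Sequence[float]) -> Optional[str]:
    """Classify one sensor frame, then look up the (hypothetical) arm command."""
    return EXPRESSION_TO_COMMAND[model.predict(frame)]


if __name__ == "__main__":
    xs, labels, prototypes = make_synthetic_frames()
    model = OneVsRestSVM().fit(xs, labels)
    for label, proto in prototypes.items():
        print(label, "->", command_for_frame(model, proto))
```

Keeping the mapping in a separate table reflects the abstract's point that the mapping itself is what the VR environment is used to evaluate: swapping in a different table changes which expression triggers which arm operation without retraining the classifier.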

Members

Masaaki Fukuoka,

Adrien Verhulst,

Fumihiko Nakamura,

Ryo Takizawa,

Katsutoshi Masai,

Michiteru Kitazaki,

Maki Sugimoto

Publication

Masaaki Fukuoka, Adrien Verhulst, Fumihiko Nakamura, Ryo Takizawa, Katsutoshi Masai, and Maki Sugimoto, FaceDrive: Facial Expressions Commands to Control Virtual Supernumerary Robotic Arms, SIGGRAPH Asia 2019 Extended Reality (SA ’19), pp. 9-10, 2019.11, URL:https://doi.org/10.1145/3355355.3361888

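Masaaki Fukuoka, Adrien Verhulst, Fumihiko Nakamura, Ryo Takizawa, Katsutoshi Masai, Michiteru Kitazaki, and Maki Sugimoto, FaceDrive: A Proposed Method for Operating Wearable Robotic Arms with Facial Expressions (in Japanese), Transactions of the Virtual Reality Society of Japan, Vol. 25, No. 4, pp. 451-461, 2020.12, URL:https://doi.org/10.18974/tvrsj.25.4_451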